Interested in reading this tutorial as one of many chapters in my GraphQL book? Check out the entire book, The Road to GraphQL, which teaches you to become a fullstack developer with JavaScript.

This tutorial is part 4 of 4 in this series.

In this chapter, you will implement server-side architecture using GraphQL and Apollo Server. The GraphQL query language is implemented as a reference implementation in JavaScript by Facebook, while Apollo Server builds on it to simplify building GraphQL servers in JavaScript. Since GraphQL is a query language, its transport layer and data format are not set in stone. GraphQL isn't opinionated about them, but it is commonly used as an alternative to the popular REST architecture for client-server communication over HTTP with JSON.

In the end, you should have a fully working GraphQL server boilerplate project that implements authentication, authorization, a data access layer with a database, domain specific entities such as users and messages, different pagination strategies, and real-time abilities due to subscriptions. You can find a working solution of it, as well as a working client-side application in React, in this GitHub repository: Full-stack Apollo with React and Express Boilerplate Project. I consider it an ideal starter project to realize your own idea.

While building this application with me in the following sections, I recommend verifying your implementations with the built-in GraphQL client application (e.g. GraphQL Playground). Once your database is set up, you can verify your stored data there as well. In addition, if you feel comfortable with it, you can implement a client application (in React or something else) which consumes the GraphQL API of this server. So let's get started!

Table of Contents

- Apollo Server Setup with Express

- Apollo Server: Type Definitions

- Apollo Server: Resolvers

- Apollo Server: Type Relationships

- Apollo Server: Queries and Mutations

- GraphQL Schema Stitching with Apollo Server

- PostgreSQL with Sequelize for a GraphQL Server

- Connecting Resolvers and Database

- Apollo Server: Validation and Errors

- Apollo Server: Authentication

- Authorization with GraphQL and Apollo Server

- GraphQL Custom Scalars in Apollo Server

- Pagination in GraphQL with Apollo Server

- GraphQL Subscriptions

- Testing a GraphQL Server

- Batching and Caching in GraphQL with Data Loader

- GraphQL Server + PostgreSQL Deployment to Heroku

Apollo Server Setup with Express

There are two ways to start out with this application. You can follow my guidance in this minimal Node.js setup guide step by step or you can find a starter project in this GitHub repository and follow its installation instructions.

Apollo Server can be used with several popular libraries for Node.js such as Express, Koa, and Hapi. It is kept library agnostic, so it's possible to connect it with many different third-party libraries in client and server applications. In this application, you will use Express, because it is the most popular and common middleware library for Node.js.

Install these two dependencies to the package.json file and node_modules folder:

npm install apollo-server apollo-server-express --save

As you can see by the library names, you can use any other middleware solution (e.g. Koa, Hapi) to complement your standalone Apollo Server. Apart from these libraries for Apollo Server, you need the core libraries for Express and GraphQL:

npm install express graphql --save

Now every library is set to get started with the source code in the src/index.js file. First, you have to import the necessary parts for getting started with Apollo Server in Express:

import express from 'express';
import { ApolloServer } from 'apollo-server-express';

Second, use both imports for initializing your Apollo Server with Express:

import express from 'express';
import { ApolloServer } from 'apollo-server-express';

const app = express();

const schema = ...
const resolvers = ...

const server = new ApolloServer({
  typeDefs: schema,
  resolvers,
});

server.applyMiddleware({ app, path: '/graphql' });

app.listen({ port: 8000 }, () => {
  console.log('Apollo Server on http://localhost:8000/graphql');
});

Using Apollo Server's applyMiddleware() method, you can opt in to any middleware, which in this case is Express. Also, you can specify the path for your GraphQL API endpoint. Beyond this, you can see how the Express application gets initialized. The only missing items are the definitions of the schema and resolvers for creating the Apollo Server instance. We'll implement them first and learn about them after:

import express from 'express';
import { ApolloServer, gql } from 'apollo-server-express';

const app = express();

const schema = gql`
  type Query {
    me: User
  }

  type User {
    username: String!
  }
`;

const resolvers = {
  Query: {
    me: () => {
      return {
        username: 'Robin Wieruch',
      };
    },
  },
};

...

The GraphQL schema provided to Apollo Server describes all the data available for reading and writing via GraphQL. Any client that consumes the GraphQL API can use it. The schema consists of type definitions, starting with a mandatory top level Query type for reading data, followed by fields and nested fields. In the schema from the Apollo Server setup, you have defined a me field, which is of the object type User. In this case, a User type has only a username field, a scalar type. There are various scalar types in the GraphQL specification for defining strings (String), booleans (Boolean), integers (Int), and more. At some point, the schema has to end at its leaf nodes with scalar types to resolve everything properly. Think about it as similar to a JavaScript object with objects or arrays inside, except it requires primitives like strings, booleans, or integers at some point.

const data = {
  me: {
    username: 'Robin Wieruch',
  },
};

In the GraphQL schema for setting up an Apollo Server, resolvers are used to return data for fields from the schema. The data source doesn't matter, because the data can be hardcoded, can come from a database, or from another (RESTful) API endpoint. You will learn more about potential data sources later. For now, it only matters that the resolvers are agnostic about where the data comes from, which separates GraphQL from your typical database query language. Resolvers are functions that resolve data for your GraphQL fields in the schema. In the previous example, only a user object with the username "Robin Wieruch" gets resolved from the me field.

Your GraphQL API with Apollo Server and Express should be working now. On the command line, you can always start your application with the npm start script to verify it works after you make changes. To verify it without a client application, Apollo Server comes with GraphQL Playground, a built-in client for consuming GraphQL APIs. You can open it by visiting your GraphQL API endpoint in a browser at http://localhost:8000/graphql. In the application, define your first GraphQL query to see its result:

{
  me {
    username
  }
}

The result for the query should look like this or your defined sample data:

{
  "data": {
    "me": {
      "username": "Robin Wieruch"
    }
  }
}

I might not mention GraphQL Playground as much moving forward, but I leave it to you to verify your GraphQL API with it after you make changes. It is a useful tool to experiment with and explore your own API. Optionally, you can also add CORS to your Express middleware. First, install CORS on the command line:

npm install cors --save

Second, use it in your Express middleware:

import cors from 'cors';
import express from 'express';
import { ApolloServer, gql } from 'apollo-server-express';

const app = express();

app.use(cors());

...

CORS is needed to perform HTTP requests from another domain than your server domain to your server. Otherwise you may run into cross-origin resource sharing errors for your GraphQL server.

Exercises:

- Confirm your source code for the last section

- Confirm the changes from the last section

- Read more about GraphQL

- Experiment with the schema and the resolver

- Add more fields to the user type

- Fulfill the requirements in the resolver

- Query your fields in the GraphQL Playground

- Read more about Apollo Server Standalone

- Read more about Apollo Server in Express Setup

Apollo Server: Type Definitions

This section is all about GraphQL type definitions and how they are used to define the overall GraphQL schema. A GraphQL schema is defined by its types, the relationships between the types, and their structure. Therefore GraphQL uses a Schema Definition Language (SDL). However, the schema doesn't define where the data comes from. This responsibility is handled by resolvers outside of the SDL. When you used Apollo Server before, you used a User object type within the schema and defined a resolver which returned a user for the corresponding me field.

Note the exclamation point for the username field in the User object type. It means that the username is a non-nullable field. Whenever a field of type User with a username is returned from the GraphQL schema, the user has to have a username. It cannot be undefined or null. However, there isn't an exclamation point for the User type on the me field. Does it mean that the result of the me field can be null? That is the case for this particular scenario. A user cannot always be returned for the me field, because the server has to know who is making the request before it can respond with that user. Later, you will implement an authentication mechanism (sign up, sign in, sign out) with your GraphQL server. The me field is populated with a user object, such as account details, only when a user is authenticated with the server. Otherwise, it remains null. When you define GraphQL type definitions, you have to make conscious decisions about the types, relationships, structure and (non-null) fields.
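The nullable me field described above can be sketched in plain JavaScript. This is only an illustration: the shape of the context object and the sampleUser constant are assumptions, and authentication itself is covered later.

```javascript
// Hypothetical sample user standing in for an authenticated account.
const sampleUser = { id: '1', username: 'Robin Wieruch' };

// Resolver for the nullable `me: User` field.
// Assumption: `context.me` is only set for authenticated requests.
const resolveMe = (parent, args, context) => context.me || null;

// Authenticated request: the user object is returned.
console.log(resolveMe(null, {}, { me: sampleUser }).username); // Robin Wieruch

// Anonymous request: the field resolves to null, which the schema allows.
console.log(resolveMe(null, {}, {})); // null
```

If the field were declared as me: User! instead, returning null would be a schema violation, which is why the nullable form suits this case.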

We extend the schema by extending or adding more type definitions to it, and use GraphQL arguments to handle user fields:

const schema = gql`
  type Query {
    me: User
    user(id: ID!): User
  }

  type User {
    username: String!
  }
`;

GraphQL arguments can be used to make more fine-grained queries because you can provide them to the GraphQL query. Arguments can be used on a per-field level with parentheses. You must also define the type, which in this case is a non-nullable identifier to retrieve a user from a data source. The query returns the User type, which can be null because a user entity might not be found in the data source when the provided id doesn't match any user. Now you can see how two queries share the same GraphQL type, so when you add a field to it, such as the id field, a client can request it in both queries:

const schema = gql`
  type Query {
    me: User
    user(id: ID!): User
  }

  type User {
    id: ID!
    username: String!
  }
`;

You may be wondering about the ID scalar type. The ID denotes an identifier used internally for advanced features like caching or refetching. It is serialized like a String, but it signals that the value is a unique identifier rather than human-readable text. All that's missing from the new GraphQL query is the resolver, so we'll add it to the map of resolvers with sample data:

const resolvers = {
  Query: {
    me: () => {
      return {
        username: 'Robin Wieruch',
      };
    },
    user: () => {
      return {
        username: 'Dave Davids',
      };
    },
  },
};

Next, make use of the incoming id argument from the GraphQL query to decide which user to return. All the arguments can be found in the second argument in the resolver function's signature:

const resolvers = {
  Query: {
    me: () => {
      return {
        username: 'Robin Wieruch',
      };
    },
    user: (parent, args) => {
      return {
        username: 'Dave Davids',
      };
    },
  },
};

The first argument is called parent, but you shouldn't worry about it for now. Later, it will be showcased where it can be used in your resolvers. Now, to make the example more realistic, extract a map of sample users and return a user based on the id used as a key in the extracted map:

let users = {
  1: {
    id: '1',
    username: 'Robin Wieruch',
  },
  2: {
    id: '2',
    username: 'Dave Davids',
  },
};

const me = users[1];

const resolvers = {
  Query: {
    user: (parent, { id }) => {
      return users[id];
    },
    me: () => {
      return me;
    },
  },
};
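It may not be obvious why a string id from a query finds a user stored under a numeric-looking key. The following standalone sketch (the findUser helper is a hypothetical name for illustration) shows that JavaScript object keys are always strings, so the lookup works either way:

```javascript
const users = {
  1: { id: '1', username: 'Robin Wieruch' },
  2: { id: '2', username: 'Dave Davids' },
};

// Object keys are always strings, so the numeric literal keys become '1' and '2'.
console.log(Object.keys(users)); // [ '1', '2' ]

// Hypothetical helper mirroring the body of the user resolver.
const findUser = (id) => users[id];

// The id argument arrives as a string and matches the string key directly.
console.log(findUser('2').username); // Dave Davids

// An unknown id yields undefined; for a nullable field the response contains null.
console.log(findUser('42')); // undefined
```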

Now try out your queries in GraphQL Playground:

{
  user(id: "2") {
    username
  }
  me {
    username
  }
}

It should return this result:

{
  "data": {
    "user": {
      "username": "Dave Davids"
    },
    "me": {
      "username": "Robin Wieruch"
    }
  }
}

Querying a list of users will be our third query. First, add the query to the schema again:

const schema = gql`
  type Query {
    users: [User!]
    user(id: ID!): User
    me: User
  }

  type User {
    id: ID!
    username: String!
  }
`;

In this case, the users field returns a list of users of type User, which is denoted with the square brackets. Within the list, no user is allowed to be null, but the list itself can be null in case there are no users (a list that must never be null would be written as [User!]!). Once you add a new query to your schema, you are obligated to define it in your resolvers within the Query object:

const resolvers = {
  Query: {
    users: () => {
      return Object.values(users);
    },
    user: (parent, { id }) => {
      return users[id];
    },
    me: () => {
      return me;
    },
  },
};
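The difference between [User!] and [User!]! mentioned above can be made concrete with a small checker. This is a simplified model written for illustration, not how the GraphQL library validates results internally; isValidList and its option names are hypothetical.

```javascript
// Checks a resolver result against a list field's nullability rules.
// listNonNull: a "!" after the closing bracket; itemNonNull: a "!" inside the brackets.
const isValidList = (value, { listNonNull, itemNonNull }) => {
  if (value === null || value === undefined) return !listNonNull;
  if (!Array.isArray(value)) return false;
  return (
    !itemNonNull ||
    value.every((item) => item !== null && item !== undefined)
  );
};

const userList = { listNonNull: false, itemNonNull: true }; // [User!]
const strictList = { listNonNull: true, itemNonNull: true }; // [User!]!

console.log(isValidList(null, userList)); // true: the list itself may be null
console.log(isValidList(null, strictList)); // false: the list must exist
console.log(isValidList([], strictList)); // true: an empty list is still a list
console.log(isValidList([null], userList)); // false: items must not be null
```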

You have three queries that can be used in your GraphQL client (e.g. GraphQL Playground) applications. All of them operate on the same User type to fulfil the data requirements in the resolvers, so each query has to have a matching resolver. All queries are grouped under one unique, mandatory Query type, which lists all available GraphQL queries exposed to your clients as your GraphQL API for reading data. Later, you will learn about the Mutation type, for grouping a GraphQL API for writing data.

Exercises:

- Confirm your source code for the last section

- Confirm the changes from the last section

- Read more about the GraphQL schema with Apollo Server

- Read more about the GraphQL mindset: Thinking in Graphs

- Read more about nullability in GraphQL

Apollo Server: Resolvers

This section continues with the GraphQL schema in Apollo Server, but transitions more to the resolver side of the subject. In your GraphQL type definitions you have defined types, their relations and their structure. But there is nothing about how to get the data. That's where the GraphQL resolvers come into play.

In JavaScript, the resolvers are grouped in a JavaScript object, often called a resolver map. Each top level query in your Query type has to have a resolver. Now, we'll resolve things on a per-field level.

const resolvers = {
  Query: {
    users: () => {
      return Object.values(users);
    },
    user: (parent, { id }) => {
      return users[id];
    },
    me: () => {
      return me;
    },
  },

  User: {
    username: () => 'Hans',
  },
};

Once you start your application again and query for a list of users, every user should have an identical username.

// query
{
  users {
    username
    id
  }
}

// query result
{
  "data": {
    "users": [
      {
        "username": "Hans",
        "id": "1"
      },
      {
        "username": "Hans",
        "id": "2"
      }
    ]
  }
}

The GraphQL resolvers can operate more specifically on a per-field level. You can override the username of every User type by resolving a username field. Otherwise, the default username property of the user entity is taken for it. Generally this applies to every field. Either you decide specifically what the field should return in a resolver function, or GraphQL tries to fall back for the field by retrieving the property automatically from the JavaScript entity.
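The fallback behavior just described can be modeled in a few lines of plain JavaScript. This is a deliberately simplified sketch of the idea, not the actual GraphQL execution code; resolveField and resolverMap are hypothetical names.

```javascript
// Simplified model of field resolution: use an explicit resolver if one exists,
// otherwise fall back to the property with the same name on the parent object.
const resolveField = (resolverMap, typeName, fieldName, parent) => {
  const typeResolvers = resolverMap[typeName] || {};
  const resolver = typeResolvers[fieldName];
  return resolver ? resolver(parent) : parent[fieldName];
};

const resolverMap = {
  User: {
    username: () => 'Hans', // explicit resolver overrides the stored username
  },
};

const user = { id: '1', username: 'Robin Wieruch' };

console.log(resolveField(resolverMap, 'User', 'username', user)); // Hans
console.log(resolveField(resolverMap, 'User', 'id', user)); // 1
```

The id field has no explicit resolver, so the value comes straight from the entity, which matches the query result shown earlier.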

Let's evolve this a bit by diving into the function signatures of resolver functions. Previously, you have seen that the second argument of the resolver function holds the incoming arguments of a query. That's how you were able to retrieve the id argument for the user from the Query. The first argument is called the parent or root argument, and always holds the result of the previously resolved field. Let's check this for the new username resolver function.

const resolvers = {
  Query: {
    users: () => {
      return Object.values(users);
    },
    user: (parent, { id }) => {
      return users[id];
    },
    me: () => {
      return me;
    },
  },

  User: {
    username: parent => {
      return parent.username;
    },
  },
};

When you query your list of users again in a running application, all usernames should resolve correctly. That's because GraphQL first resolves all users in the users resolver, and then goes through the User's username resolver for each user. Each user is accessible as the first argument in the resolver function, so it can be used to access more properties on the entity. You can rename your parent argument to make it more explicit:

const resolvers = {
  Query: {
    ...
  },

  User: {
    username: user => {
      return user.username;
    },
  },
};

In this case, the username resolver function is redundant, because it only mimics the default behavior of a GraphQL resolver. If you leave it out, the username would still resolve with its correct property. However, this fine control over the resolved fields opens up powerful possibilities. It gives you the flexibility to add data mapping without worrying about the data sources behind the GraphQL layer. Here, we expose the full username of a user, a combination of its first and last name by using template literals:

const resolvers = {
  ...

  User: {
    username: user => `${user.firstname} ${user.lastname}`,
  },
};

For now, we are going to leave out the username resolver, because it only mimics the default behavior with Apollo Server. These are called default resolvers, because they work without explicit definitions. Next, look to the other arguments in the function signature of a GraphQL resolver:

(parent, args, context, info) => { ... }

The context argument is the third argument in the resolver function, used to inject dependencies from the outside into the resolver function. Assume the signed-in user is known to the outside world of your GraphQL layer because a request to your GraphQL server is made and the authenticated user is retrieved from elsewhere. You might decide to inject this signed-in user into your resolvers for application functionality, which is done with the me user for the me field. Remove the declaration of the me user (const me = ...) and pass it in the context object when Apollo Server gets initialized instead:

const server = new ApolloServer({
  typeDefs: schema,
  resolvers,
  context: {
    me: users[1],
  },
});

Next, access it in the resolver's function signature as a third argument, which gets destructured into the me property from the context object.

const resolvers = {
  Query: {
    users: () => {
      return Object.values(users);
    },
    user: (parent, { id }) => {
      return users[id];
    },
    me: (parent, args, { me }) => {
      return me;
    },
  },
};

The context should be the same for all resolvers now. Every resolver that needs to access the context, or in this case the me user, can do so using the third argument of the resolver function.
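A static context object means every request sees the same me user. As far as I know, Apollo Server also accepts a function for the context option that receives the incoming request, so the context can be built per request. The sketch below only simulates that idea in plain JavaScript: createContext is a hypothetical name, and the x-user-id header is invented for illustration and is not a real authentication mechanism.

```javascript
const users = {
  1: { id: '1', username: 'Robin Wieruch' },
  2: { id: '2', username: 'Dave Davids' },
};

// Hypothetical context factory; 'x-user-id' is an invented header for illustration.
const createContext = ({ req }) => {
  const userId = req.headers['x-user-id'];
  return { me: users[userId] || null };
};

// Simulated requests standing in for what Express would pass along.
const signedIn = createContext({ req: { headers: { 'x-user-id': '2' } } });
const anonymous = createContext({ req: { headers: {} } });

console.log(signedIn.me.username); // Dave Davids
console.log(anonymous.me); // null
```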

The fourth argument in a resolver function, the info argument, isn't used very often, because it only gives you internal information about the GraphQL request. It can be used for debugging, error handling, advanced monitoring, and tracking. You don't need to worry about it for now.

A couple of words about the resolver's return values: a resolver can return arrays, objects and scalar types, but the returned value has to match the type definitions. If the type definition declares a list or a non-nullable field, the resolver has to return an array or a non-null value respectively. What about JavaScript promises? Often, you will make a request to a data source (database, RESTful API) in a resolver, returning a JavaScript promise in the resolver. GraphQL can deal with it, and waits for the promise to resolve. That's why you don't need to worry about asynchronous requests to your data source later.
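To illustrate the point about promises, here is a minimal sketch of a resolver that returns a promise. The findUserById function is a hypothetical stand-in for a database call, simulated with a timer.

```javascript
const users = {
  1: { id: '1', username: 'Robin Wieruch' },
  2: { id: '2', username: 'Dave Davids' },
};

// Hypothetical data access function that simulates a slow database lookup.
const findUserById = (id) =>
  new Promise((resolve) => {
    setTimeout(() => resolve(users[id] || null), 10);
  });

const resolvers = {
  Query: {
    // Returning the promise is enough; the GraphQL execution waits for it.
    user: (parent, { id }) => findUserById(id),
  },
};

const pending = resolvers.Query.user(null, { id: '1' });
console.log(pending instanceof Promise); // true
pending.then((user) => console.log(user.username)); // Robin Wieruch
```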

Exercises:

- Confirm your source code for the last section

- Confirm the changes from the last section

- Read more about GraphQL resolvers in Apollo

Apollo Server: Type Relationships

You started to evolve your GraphQL schema by defining queries and type definitions. In this section, let's add a second GraphQL type called Message and see how it behaves with your User type. In this application, a user can have messages. Basically, you could write a simple chat application with both types. First, add two new top level queries and the new Message type to your GraphQL schema:

const schema = gql`
  type Query {
    users: [User!]
    user(id: ID!): User
    me: User

    messages: [Message!]!
    message(id: ID!): Message!
  }

  type User {
    id: ID!
    username: String!
  }

  type Message {
    id: ID!
    text: String!
  }
`;

Second, you have to add two resolvers for Apollo Server to match the two new top level queries:

let messages = {
  1: {
    id: '1',
    text: 'Hello World',
  },
  2: {
    id: '2',
    text: 'By World',
  },
};

const resolvers = {
  Query: {
    users: () => {
      return Object.values(users);
    },
    user: (parent, { id }) => {
      return users[id];
    },
    me: (parent, args, { me }) => {
      return me;
    },
    messages: () => {
      return Object.values(messages);
    },
    message: (parent, { id }) => {
      return messages[id];
    },
  },
};

Once you run your application again, your new GraphQL queries should work in GraphQL Playground. Now we'll add relationships to both GraphQL types. Historically, it was common with REST to add an identifier to each entity to resolve its relationship.

const schema = gql`
  type Query {
    users: [User!]
    user(id: ID!): User
    me: User

    messages: [Message!]!
    message(id: ID!): Message!
  }

  type User {
    id: ID!
    username: String!
  }

  type Message {
    id: ID!
    text: String!
    userId: ID!
  }
`;

With GraphQL, instead of using an identifier and resolving the entities with multiple waterfall requests, you can use the User entity within the message entity directly:

const schema = gql`
  ...

  type Message {
    id: ID!
    text: String!
    user: User!
  }
`;

Since a message doesn't have a user entity in your model, the default resolver doesn't work. You need to set up an explicit resolver for it.

const resolvers = {
  Query: {
    users: () => {
      return Object.values(users);
    },
    user: (parent, { id }) => {
      return users[id];
    },
    me: (parent, args, { me }) => {
      return me;
    },
    messages: () => {
      return Object.values(messages);
    },
    message: (parent, { id }) => {
      return messages[id];
    },
  },

  Message: {
    user: (parent, args, { me }) => {
      return me;
    },
  },
};

In this case, every message is written by the authenticated me user. If you query the following about messages, you will get this result:

// query
{
  message(id: "1") {
    id
    text
    user {
      id
      username
    }
  }
}

// query result
{
  "data": {
    "message": {
      "id": "1",
      "text": "Hello World",
      "user": {
        "id": "1",
        "username": "Robin Wieruch"
      }
    }
  }
}

Let's make the behavior more like in a real world application. Your sample data needs keys to reference entities to each other, so the message passes a userId property:

let messages = {
  1: {
    id: '1',
    text: 'Hello World',
    userId: '1',
  },
  2: {
    id: '2',
    text: 'By World',
    userId: '2',
  },
};

The parent argument in your resolver function can be used to get a message's userId, which can then be used to retrieve the appropriate user.

const resolvers = {
  ...

  Message: {
    user: message => {
      return users[message.userId];
    },
  },
};

Now every message has its own dedicated user. The last steps were crucial for understanding GraphQL. Even though you have default resolver functions or this fine-grained control over the fields by defining your own resolver functions, it is up to you to retrieve the data from a data source. The developer makes sure every field can be resolved. GraphQL lets you group those fields into one GraphQL query, regardless of the data source.
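To see how the pieces fit together, the following sketch walks the resolver chain by hand for a query like message(id: "2") with its text and the username of its user. It is a manual simulation for illustration only; a GraphQL server performs these steps for you.

```javascript
const users = {
  1: { id: '1', username: 'Robin Wieruch' },
  2: { id: '2', username: 'Dave Davids' },
};

const messages = {
  1: { id: '1', text: 'Hello World', userId: '1' },
  2: { id: '2', text: 'By World', userId: '2' },
};

const resolvers = {
  Query: {
    message: (parent, { id }) => messages[id],
  },
  Message: {
    user: (message) => users[message.userId],
  },
};

// Step 1: the top level field resolves the message entity.
const message = resolvers.Query.message(null, { id: '2' });

// Step 2: the resolved message becomes the parent of the nested user field.
const author = resolvers.Message.user(message);

// Step 3: scalar fields fall back to the properties on each entity.
const result = {
  message: { text: message.text, user: { username: author.username } },
};

console.log(JSON.stringify(result));
// {"message":{"text":"By World","user":{"username":"Dave Davids"}}}
```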

Let's recap this implementation detail again with another relationship that involves user messages. In this case, the relationships go in the other direction.

let users = {
  1: {
    id: '1',
    username: 'Robin Wieruch',
    messageIds: [1],
  },
  2: {
    id: '2',
    username: 'Dave Davids',
    messageIds: [2],
  },
};

This sample data could come from any data source. The important part is that it has a key that defines a relationship to another entity. All of this is independent from GraphQL, so let's define the relationship from users to their messages in GraphQL.

const schema = gql`
  type Query {
    users: [User!]
    user(id: ID!): User
    me: User

    messages: [Message!]!
    message(id: ID!): Message!
  }

  type User {
    id: ID!
    username: String!
    messages: [Message!]
  }

  type Message {
    id: ID!
    text: String!
    user: User!
  }
`;

Since a user entity doesn't have messages, but message identifiers, you can write a custom resolver for the messages of a user again. In this case, the resolver retrieves all messages of the user by filtering the list of sample messages by the user's id.

const resolvers = {
  ...

  User: {
    messages: user => {
      return Object.values(messages).filter(
        message => message.userId === user.id,
      );
    },
  },

  Message: {
    user: message => {
      return users[message.userId];
    },
  },
};
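The resolver above filters messages by userId and does not read the messageIds list. As an alternative sketch, the same field could be resolved from the stored identifiers instead. Note the assumption here: the identifiers in messageIds are written as strings so they match the message ids, which differs from the numeric sample values used in this section.

```javascript
const messages = {
  1: { id: '1', text: 'Hello World', userId: '1' },
  2: { id: '2', text: 'By World', userId: '2' },
};

// Assumption: messageIds hold string ids, unlike the numeric sample data above.
const users = {
  1: { id: '1', username: 'Robin Wieruch', messageIds: ['1'] },
  2: { id: '2', username: 'Dave Davids', messageIds: ['2'] },
};

const resolvers = {
  User: {
    // Look up each referenced message and drop ids that no longer exist.
    messages: (user) =>
      user.messageIds.map((id) => messages[id]).filter(Boolean),
  },
};

const texts = resolvers.User.messages(users[1]).map((message) => message.text);
console.log(texts); // [ 'Hello World' ]
```

Both approaches give the same result as long as userId and messageIds are kept in sync; which one is preferable depends on how the data source stores the relationship.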

This section has shown you how to expose relationships in your GraphQL schema. If the default resolvers don't work, you have to define your own custom resolvers on a per field level for resolving the data from different data sources.

Exercises:

- Confirm your source code for the last section

- Confirm the changes from the last section

- Query a list of users with their messages

- Query a list of messages with their user

- Read more about the GraphQL schema

Apollo Server: Queries and Mutations

So far, you have only defined queries in your GraphQL schema using two related GraphQL types for reading data. These should work in GraphQL Playground, because you have given them equivalent resolvers. Now we'll cover GraphQL mutations for writing data. In the following, you create two mutations: one to create a message, and one to delete it. Let's start with creating a message as the currently signed in user (the me user).

const schema = gql`
  type Query {
    users: [User!]
    user(id: ID!): User
    me: User

    messages: [Message!]!
    message(id: ID!): Message!
  }

  type Mutation {
    createMessage(text: String!): Message!
  }

  ...
`;

Apart from the Query type, there are also Mutation and Subscription types. The Mutation type groups all your GraphQL operations for writing data instead of reading it. In this case, the createMessage mutation accepts a non-nullable text input as an argument, and returns the created message. Again, you have to implement the resolver as the counterpart for the mutation, just as with the previous queries, which happens in the mutation part of the resolver map:

const resolvers = {
  Query: {
    ...
  },

  Mutation: {
    createMessage: (parent, { text }, { me }) => {
      const message = {
        text,
        userId: me.id,
      };

      return message;
    },
  },

  ...
};

The mutation's resolver has access to the text in its second argument. It also has access to the signed-in user in the third argument, used to associate the created message with the user. The parent argument isn't used. The one thing missing to make the message complete is an identifier. To make sure a unique identifier is used, install this neat library in the command line:

npm install uuid --save

And import it to your file:

import { v4 as uuidv4 } from 'uuid';

Now you can give your message a unique identifier:

const resolvers = {
  Query: {
    ...
  },

  Mutation: {
    createMessage: (parent, { text }, { me }) => {
      const id = uuidv4();
      const message = {
        id,
        text,
        userId: me.id,
      };

      return message;
    },
  },

  ...
};

So far, the mutation creates a message object and returns it to the API. However, most mutations have side-effects, because they are writing data to your data source or performing another action. Most often, it will be a write operation to your database, but in this case, you only need to update your users and messages variables. The list of available messages needs to be updated, and the user's reference list of messageIds needs to have the new message id.

const resolvers = {
  Query: {
    ...
  },

  Mutation: {
    createMessage: (parent, { text }, { me }) => {
      const id = uuidv4();
      const message = {
        id,
        text,
        userId: me.id,
      };

      messages[id] = message;
      users[me.id].messageIds.push(id);

      return message;
    },
  },

  ...
};

That's it for the first mutation. You can try it right now in GraphQL Playground:

mutation {
  createMessage (text: "Hello GraphQL!") {
    id
    text
  }
}

The last part is essentially your writing operation to a data source. In this case, you have only updated the sample data, but it would most likely be a database in practical use. Next, implement the mutation for deleting messages:

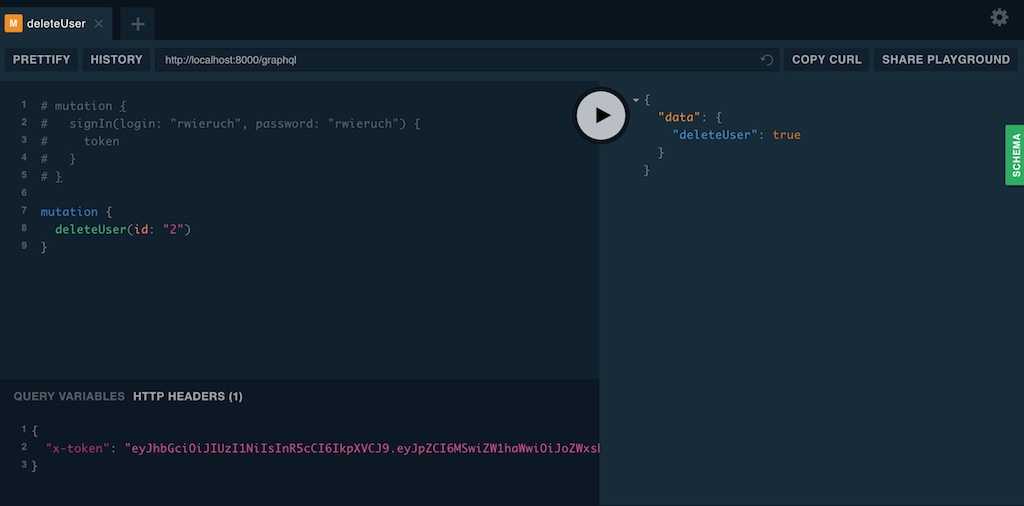

const schema = gql`
  type Query {
    users: [User!]
    user(id: ID!): User
    me: User

    messages: [Message!]!
    message(id: ID!): Message!
  }

  type Mutation {
    createMessage(text: String!): Message!
    deleteMessage(id: ID!): Boolean!
  }

  ...
`;

The mutation returns a boolean that tells if the deletion was successful or not, and it takes an identifier as input to identify the message. The counterpart of the GraphQL schema implementation is a resolver:

const resolvers = {
  Query: {
    ...
  },

  Mutation: {
    ...

    deleteMessage: (parent, { id }) => {
      const { [id]: message, ...otherMessages } = messages;

      if (!message) {
        return false;
      }

      messages = otherMessages;

      return true;
    },
  },

  ...
};

The resolver finds the message by id from the messages object using destructuring. If there is no message, the resolver returns false. If there is a message, the remaining messages without the deleted message become the updated messages object, and the resolver returns true. Mutations in GraphQL and Apollo Server aren't much different from GraphQL queries, except they write data.
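One detail the deletion above leaves open is the author's messageIds list, which createMessage keeps up to date. The following standalone sketch shows one possible way to clean it up on deletion. It is an optional variation and assumes that messageIds hold string ids, as they do for messages created with createMessage.

```javascript
let messages = {
  1: { id: '1', text: 'Hello World', userId: '1' },
  2: { id: '2', text: 'By World', userId: '2' },
};

// Assumption: messageIds hold string ids matching the message ids.
const users = {
  1: { id: '1', username: 'Robin Wieruch', messageIds: ['1'] },
  2: { id: '2', username: 'Dave Davids', messageIds: ['2'] },
};

const deleteMessage = (parent, { id }) => {
  const { [id]: message, ...otherMessages } = messages;

  if (!message) {
    return false;
  }

  messages = otherMessages;

  // Also drop the id from the author's reference list.
  const author = users[message.userId];
  if (author) {
    author.messageIds = author.messageIds.filter(
      (messageId) => messageId !== id,
    );
  }

  return true;
};

console.log(deleteMessage(null, { id: '1' })); // true
console.log(deleteMessage(null, { id: '1' })); // false, already deleted
console.log(users[1].messageIds); // []
```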

There is only one GraphQL operation missing for making the messages features complete. It is possible to read, create, and delete messages, so the only operation left is updating them as an exercise.

Exercises:

- Confirm your source code for the last section

- Confirm the changes from the last section

- Create a message in GraphQL Playground with a mutation

- Query all messages

- Query the me user with messages

- Delete a message in GraphQL Playground with a mutation

- Query all messages

- Query the me user with messages

- Implement an updateMessage mutation for completing all CRUD operations for a message in GraphQL

- Read more about GraphQL queries and mutations

GraphQL Schema Stitching with Apollo Server

Schema stitching is a powerful feature in GraphQL. It's about merging multiple GraphQL schemas into one schema, which may be consumed in a GraphQL client application. For now, you only have one schema in your application, but there may come a need for more complicated operations that use multiple schemas and schema stitching. For instance, assume you have a GraphQL schema you want to modularize based on domains (e.g. user, message). You may end up with two schemas, where each schema matches one type (e.g. User type, Message type). The operation requires merging both GraphQL schemas to make the entire GraphQL schema accessible with your GraphQL server's API. That's one of the basic motivations behind schema stitching.

But you can take this one step further: you may end up with microservices or third-party platforms that expose their dedicated GraphQL APIs. These can be merged into one GraphQL schema, so that the stitched schema becomes a single source of truth. Then again, a client can consume the entire schema, which is composed out of multiple domain-driven microservices.

In our case, let's start with a separation by technical concerns for the GraphQL schema and resolvers. Afterward, you will apply the separation by domains that are users and messages.

Technical Separation

Let's take the GraphQL schema from the application where you have a User type and Message type. In the same step, split out the resolvers to a dedicated place. The src/index.js file, where the schema and resolvers are needed for the Apollo Server instantiation, should only import both things. It becomes three things when outsourcing data, which in this case is the sample data, now called models.

import cors from 'cors';
import express from 'express';
import { ApolloServer } from 'apollo-server-express';

import schema from './schema';
import resolvers from './resolvers';
import models from './models';

const app = express();

app.use(cors());

const server = new ApolloServer({
  typeDefs: schema,
  resolvers,
  context: {
    models,
    me: models.users[1],
  },
});

server.applyMiddleware({ app, path: '/graphql' });

app.listen({ port: 8000 }, () => {
  console.log('Apollo Server on http://localhost:8000/graphql');
});

As an improvement, models are passed to the resolver functions as context. The models are your data access layer, which can be sample data, a database, or a third-party API. It's always good to pass those things from the outside to keep the resolver functions pure. Then, you don't need to import the models in each resolver file. In this case, the models are the sample data moved to the src/models/index.js file:

let users = {
  1: {
    id: '1',
    username: 'Robin Wieruch',
    messageIds: [1],
  },
  2: {
    id: '2',
    username: 'Dave Davids',
    messageIds: [2],
  },
};

let messages = {
  1: {
    id: '1',
    text: 'Hello World',
    userId: '1',
  },
  2: {
    id: '2',
    text: 'By World',
    userId: '2',
  },
};

export default {
  users,
  messages,
};

Since you have passed the models to your Apollo Server context, they are accessible in each resolver. Next, move the resolvers to the src/resolvers/index.js file, and adjust the resolver's function signature by adding the models when they are needed to read/write users or messages.

import { v4 as uuidv4 } from 'uuid';

export default {
  Query: {
    users: (parent, args, { models }) => {
      return Object.values(models.users);
    },
    user: (parent, { id }, { models }) => {
      return models.users[id];
    },
    me: (parent, args, { me }) => {
      return me;
    },
    messages: (parent, args, { models }) => {
      return Object.values(models.messages);
    },
    message: (parent, { id }, { models }) => {
      return models.messages[id];
    },
  },

  Mutation: {
    createMessage: (parent, { text }, { me, models }) => {
      const id = uuidv4();
      const message = {
        id,
        text,
        userId: me.id,
      };

      models.messages[id] = message;
      models.users[me.id].messageIds.push(id);

      return message;
    },

    deleteMessage: (parent, { id }, { models }) => {
      const { [id]: message, ...otherMessages } = models.messages;

      if (!message) {
        return false;
      }

      models.messages = otherMessages;

      return true;
    },
  },

  User: {
    messages: (user, args, { models }) => {
      return Object.values(models.messages).filter(
        message => message.userId === user.id,
      );
    },
  },

  Message: {
    user: (message, args, { models }) => {
      return models.users[message.userId];
    },
  },
};

The resolvers receive all sample data as models in the context argument rather than operating directly on the sample data as before. As mentioned, it keeps the resolver functions pure. Later, you will have an easier time testing resolver functions in isolation. Next, move your schema's type definitions to the src/schema/index.js file:

import { gql } from 'apollo-server-express';

export default gql`
  type Query {
    users: [User!]
    user(id: ID!): User
    me: User

    messages: [Message!]!
    message(id: ID!): Message!
  }

  type Mutation {
    createMessage(text: String!): Message!
    deleteMessage(id: ID!): Boolean!
  }

  type User {
    id: ID!
    username: String!
    messages: [Message!]
  }

  type Message {
    id: ID!
    text: String!
    user: User!
  }
`;

The technical separation is complete, but the separation by domains, where schema stitching is needed, isn't done yet. So far, you have only outsourced the schema, resolvers and data (models) from your Apollo Server instantiation file. Everything is separated by technical concerns now. You also made a small improvement for passing the models through the context, rather than importing them in resolver files.
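Because the resolvers only reach their data through the context argument, you can call them like ordinary functions with hand-made models. The following is a minimal sketch and not part of the project: the trimmed-down resolvers object and the fakeModels test data are assumptions made for illustration, and module syntax is left out so the snippet runs on its own.

```javascript
// A trimmed-down copy of two resolvers from src/resolvers/index.js.
const resolvers = {
  Query: {
    user: (parent, { id }, { models }) => models.users[id],
  },
  User: {
    messages: (user, args, { models }) =>
      Object.values(models.messages).filter(
        message => message.userId === user.id,
      ),
  },
};

// Hypothetical test data, handed over the same way Apollo Server hands over the context.
const fakeModels = {
  users: { 1: { id: '1', username: 'Test User' } },
  messages: {
    1: { id: '1', text: 'First', userId: '1' },
    2: { id: '2', text: 'Second', userId: '2' },
  },
};

const context = { models: fakeModels };

const user = resolvers.Query.user(null, { id: '1' }, context);
const messages = resolvers.User.messages(user, {}, context);

console.log(user.username, messages.map(message => message.text));
```

No server and no imported sample data are involved here, which is the practical benefit of passing the models in from the outside.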

Domain Separation

In the next step, modularize the GraphQL schema by domains (user and message). First, separate the user-related entity in its own schema definition file called src/schema/user.js:

import { gql } from 'apollo-server-express';

export default gql`
  extend type Query {
    users: [User!]
    user(id: ID!): User
    me: User
  }

  type User {
    id: ID!
    username: String!
    messages: [Message!]
  }
`;

The same applies for the message schema definition in src/schema/message.js:

import { gql } from 'apollo-server-express';

export default gql`
  extend type Query {
    messages: [Message!]!
    message(id: ID!): Message!
  }

  extend type Mutation {
    createMessage(text: String!): Message!
    deleteMessage(id: ID!): Boolean!
  }

  type Message {
    id: ID!
    text: String!
    user: User!
  }
`;

Each file only describes its own entity, with a type and its relations. A relation can be a type from a different file, such as a Message type that still has the relation to a User type even though the User type is defined somewhere else. Note the extend statement on the Query and Mutation types. Since you have more than one of those types now, you need to extend the types. Next, define shared base types for them in the src/schema/index.js:

import { gql } from 'apollo-server-express';

import userSchema from './user';
import messageSchema from './message';

const linkSchema = gql`
  type Query {
    _: Boolean
  }

  type Mutation {
    _: Boolean
  }

  type Subscription {
    _: Boolean
  }
`;

export default [linkSchema, userSchema, messageSchema];

In this file, both schemas are merged with the help of a utility called linkSchema. The linkSchema defines all types shared within the schemas. It already defines a Subscription type for GraphQL subscriptions, which may be implemented later. As a workaround, there is an empty underscore field with a Boolean type in the merging utility schema, because there is no official way of completing this action yet. The utility schema defines the shared base types, extended with the extend statement in the other domain-specific schemas.

This time, the application runs with a stitched schema instead of one global schema. What's missing are the domain-separated resolver maps. Let's start with the user domain again in the src/resolvers/user.js file; the implementation details are left out here to save space:

export default {
  Query: {
    users: (parent, args, { models }) => {
      ...
    },
    user: (parent, { id }, { models }) => {
      ...
    },
    me: (parent, args, { me }) => {
      ...
    },
  },

  User: {
    messages: (user, args, { models }) => {
      ...
    },
  },
};

Next, add the message resolvers in the src/resolvers/message.js file:

import { v4 as uuidv4 } from 'uuid';

export default {
  Query: {
    messages: (parent, args, { models }) => {
      ...
    },
    message: (parent, { id }, { models }) => {
      ...
    },
  },

  Mutation: {
    createMessage: (parent, { text }, { me, models }) => {
      ...
    },
    deleteMessage: (parent, { id }, { models }) => {
      ...
    },
  },

  Message: {
    user: (message, args, { models }) => {
      ...
    },
  },
};

Since the Apollo Server accepts a list of resolver maps too, you can import all of your resolver maps in your src/resolvers/index.js file, and export them as a list of resolver maps again:

import userResolvers from './user';
import messageResolvers from './message';

export default [userResolvers, messageResolvers];

Then, the Apollo Server can take the resolver list to be instantiated. Start your application again and verify that everything is working for you.
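Conceptually, a list of resolver maps is combined type by type, so that the Query fields from the user domain and the message domain end up on one Query object. Apollo Server takes care of this for you. The following is only a sketch of the idea with a hypothetical mergeResolverMaps helper and stubbed resolver functions; it is not the library's actual implementation.

```javascript
// Hypothetical helper: merges resolver maps type by type (Query, Mutation, User, ...).
const mergeResolverMaps = resolverMaps =>
  resolverMaps.reduce((merged, resolverMap) => {
    Object.keys(resolverMap).forEach(typeName => {
      merged[typeName] = { ...merged[typeName], ...resolverMap[typeName] };
    });

    return merged;
  }, {});

// Stubbed resolver maps that mirror the structure of the two domain files.
const userResolvers = {
  Query: { users: () => [], me: () => null },
  User: { messages: () => [] },
};

const messageResolvers = {
  Query: { messages: () => [] },
  Mutation: { createMessage: () => null },
};

const merged = mergeResolverMaps([userResolvers, messageResolvers]);

console.log(Object.keys(merged.Query));
```

In this sketch, the merged Query type carries the fields of both domains, while User and Mutation each come from a single domain file.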

In the last section, you extracted schema and resolvers from your main file and separated both by domains. The sample data is placed in a src/models folder, where it can be migrated to a database-driven approach later. The folder structure should look similar to this:

* src/
  * models/
    * index.js
  * resolvers/
    * index.js
    * user.js
    * message.js
  * schema/
    * index.js
    * user.js
    * message.js
  * index.js

You now have a good starting point for a GraphQL server application with Node.js. The last implementations gave you a universally usable GraphQL boilerplate project to serve as a foundation for your own software development projects. As we continue, the focus shifts to connecting the GraphQL server to databases, authentication and authorization, and using powerful features like pagination.

Exercises:

- Confirm your source code for the last section

- Confirm the changes from the last section

- Read more about schema stitching with Apollo Server

- Schema stitching is only a part of schema delegation

- Read more about schema delegation

- Familiarize yourself with the motivation behind remote schemas and schema transforms

PostgreSQL with Sequelize for a GraphQL Server

To create a full-stack GraphQL application, you'll need to introduce a sophisticated data source. Sample data is volatile, while a database gives you persistent data. In this section, you'll set up PostgreSQL with Sequelize (ORM) for Apollo Server. PostgreSQL is a SQL database, whereas an alternative would be the popular NoSQL database called MongoDB (with Mongoose as ORM). The choice of tech is always opinionated. You could choose MongoDB or any other SQL/NoSQL solution over PostgreSQL, but for the sake of this application, let's stick to PostgreSQL.

This setup guide will walk you through the basic PostgreSQL setup, including installation, your first database, administrative database user setup, and essential commands. These are the things you should have accomplished after going through the instructions:

- A running installation of PostgreSQL

- A database super user with username and password

- A database created with createdb or CREATE DATABASE

You should be able to run and stop your database with the following commands:

- pg_ctl -D /usr/local/var/postgres start

- pg_ctl -D /usr/local/var/postgres stop

Use the psql command to connect to your database in the command line, where you can list databases and execute SQL statements against them. You should find a couple of these operations in the PostgreSQL setup guide, but this section will also show some of them. Consider performing these in the same way you've been completing GraphQL operations with GraphQL Playground. The psql command line interface and GraphQL Playground are effective tools for testing applications manually.

Once you have installed PostgreSQL on your local machine, you'll also want to acquire PostgreSQL for Node.js and Sequelize (ORM) for your project. I highly recommend you keep the Sequelize documentation open, as it will be useful for reference when you connect your GraphQL layer (resolvers) with your data access layer (Sequelize).

npm install pg sequelize --save

Now you can create models for the user and message domains. Models are usually the data access layer in applications. Then, set up your models with Sequelize to make read and write operations to your PostgreSQL database. The models can then be used in GraphQL resolvers by passing them through the context object to each resolver. These are the essential steps:

- Creating a model for the user domain

- Creating a model for the message domain

- Connecting the application to a database

- Providing super user's username and password

- Combining models for database use

- Synchronizing the database once application starts

First, implement the src/models/user.js model:

const user = (sequelize, DataTypes) => {
  const User = sequelize.define('user', {
    username: {
      type: DataTypes.STRING,
    },
  });

  User.associate = models => {
    User.hasMany(models.Message, { onDelete: 'CASCADE' });
  };

  return User;
};

export default user;

Next, implement the src/models/message.js model:

const message = (sequelize, DataTypes) => {
  const Message = sequelize.define('message', {
    text: {
      type: DataTypes.STRING,
    },
  });

  Message.associate = models => {
    Message.belongsTo(models.User);
  };

  return Message;
};

export default message;

Both models define the shapes of their entities. The message model has a database column with the name text of type string. You can add multiple database columns horizontally to your model. All columns of a model make up a table row in the database, and each row reflects a database entry, such as a message or user. The database table name is derived from the first argument of the Sequelize model definition. By default, Sequelize pluralizes the model name, so the message model maps to the table "messages". You can define relationships between entities with Sequelize using associations. In this case, a message entity belongs to one user, and that user has many messages. That's a minimal database setup with two domains, but since we're focusing on server-side GraphQL, you should consider reading more about database subjects outside of this application to fully grasp the concept.

Next, connect to your database from within your application in the src/models/index.js file. We'll need the database name, a database super user, and the user's password. You may also want to define a database dialect, because Sequelize supports other databases as well.

import Sequelize from 'sequelize';

const sequelize = new Sequelize(
  process.env.DATABASE,
  process.env.DATABASE_USER,
  process.env.DATABASE_PASSWORD,
  {
    dialect: 'postgres',
  },
);

export { sequelize };

Note: To access the environment variables in your source code, install and add the dotenv package as described in this setup tutorial.

In the same file, you can physically associate all your models with each other to expose them to your application as data access layer (models) for the database.

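import Sequelize from 'sequelize';

const sequelize = new Sequelize(
  process.env.DATABASE,
  process.env.DATABASE_USER,
  process.env.DATABASE_PASSWORD,
  {
    dialect: 'postgres',
  },
);

// Note: sequelize.import() was removed in Sequelize v6. With newer versions,
// import the model functions and call them with (sequelize, Sequelize.DataTypes).
const models = {
  User: sequelize.import('./user'),
  Message: sequelize.import('./message'),
};

// wire up the associations once all models are known
Object.keys(models).forEach(key => {
  if ('associate' in models[key]) {
    models[key].associate(models);
  }
});

export { sequelize };

export default models;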

The database credentials--database name, database super user name, database super user password--can be stored as environment variables. In your .env file, add those credentials as key value pairs. My defaults for local development are:

DATABASE=postgres
DATABASE_USER=postgres
DATABASE_PASSWORD=postgres
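If you want the application to start even when one of these variables is missing, you could read them through a small helper that falls back to local defaults. This is only a sketch under assumptions: getDatabaseConfig is a hypothetical helper that does not exist in the project, and the fallback values simply mirror the defaults shown above.

```javascript
// Hypothetical helper: reads the database credentials from environment variables
// and falls back to local development defaults when a variable is not set.
const getDatabaseConfig = (env = process.env) => ({
  database: env.DATABASE || 'postgres',
  user: env.DATABASE_USER || 'postgres',
  password: env.DATABASE_PASSWORD || 'postgres',
});

// Passing an explicit object instead of process.env makes the helper easy to try out.
const config = getDatabaseConfig({ DATABASE: 'mydatabase' });

console.log(config.database, config.user);
```

The three values could then be handed to the Sequelize constructor in place of the process.env lookups. Fallbacks like these are only reasonable for local development; production credentials should always come from the environment.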

You set up environment variables when you started creating this application. If not, you can also leave credentials in the source code for now. Finally, the database needs to be migrated/synchronized once your Node.js application starts. Complete this operation in your src/index.js file:

import express from 'express';
import { ApolloServer } from 'apollo-server-express';

import schema from './schema';
import resolvers from './resolvers';
import models, { sequelize } from './models';

...

sequelize.sync().then(async () => {
  app.listen({ port: 8000 }, () => {
    console.log('Apollo Server on http://localhost:8000/graphql');
  });
});

We've completed the database setup for a GraphQL server. Next, you'll replace the business logic in your resolvers, because that is where Sequelize is used to access the database instead of the sample data. The application isn't quite complete, because the resolvers don't use the new data access layer yet.

Exercises:

- Confirm your source code for the last section

- Confirm the changes from the last section

- Familiarize yourself with databases

- Try the psql command-line interface to access a database

- Check the Sequelize API by reading through their documentation

- Look up any unfamiliar database jargon mentioned here.

Connecting Resolvers and Database

Your PostgreSQL database is ready to connect to a GraphQL server on startup. Now, instead of using the sample data, you will use the data access layer (models) in GraphQL resolvers for reading and writing data to and from a database. In this section, we will cover the following:

- Use the new models in your GraphQL resolvers

- Seed your database with data when your application starts

- Add a user model method for retrieving a user by username

- Learn the essentials about psql for the command line

Let's start by refactoring the GraphQL resolvers. You passed the models via Apollo Server's context object to each GraphQL resolver earlier. We used sample data before, but the Sequelize API is necessary for our real-world database operations. In the src/resolvers/user.js file, change the following lines of code to use the Sequelize API:

export default {
  Query: {
    users: async (parent, args, { models }) => {
      return await models.User.findAll();
    },
    user: async (parent, { id }, { models }) => {
      return await models.User.findByPk(id);
    },
    me: async (parent, args, { models, me }) => {
      return await models.User.findByPk(me.id);
    },
  },

  User: {
    messages: async (user, args, { models }) => {
      return await models.Message.findAll({
        where: {
          userId: user.id,
        },
      });
    },
  },
};

The findAll() and findByPk() are commonly used Sequelize methods for database operations. Finding all messages for a specific user is more specific, though. Here, you used the where clause to narrow down messages by the userId entry in the database. Accessing a database will add another layer of complexity to your application's architecture, so be sure to reference the Sequelize API documentation as much as needed going forward.

Next, return to the src/resolvers/message.js file and perform adjustments to use the Sequelize API:

export default {
  Query: {
    messages: async (parent, args, { models }) => {
      return await models.Message.findAll();
    },
    message: async (parent, { id }, { models }) => {
      return await models.Message.findByPk(id);
    },
  },

  Mutation: {
    createMessage: async (parent, { text }, { me, models }) => {
      return await models.Message.create({
        text,
        userId: me.id,
      });
    },

    deleteMessage: async (parent, { id }, { models }) => {
      return await models.Message.destroy({ where: { id } });
    },
  },

  Message: {
    user: async (message, args, { models }) => {
      return await models.User.findByPk(message.userId);
    },
  },
};

Apart from the findByPk() and findAll() methods, you are creating and deleting a message in the mutations as well. Before, you had to generate your own identifier for the message, but now Sequelize takes care of adding a unique identifier to your message once it is created in the database.
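One detail worth knowing: Sequelize's destroy() resolves to the number of deleted rows rather than to true or false, while the schema declares deleteMessage as Boolean!. If you prefer to make the conversion explicit, a resolver could look like the following sketch. The fakeModels object is a hypothetical in-memory stand-in for the Sequelize model, so that the snippet runs without a database.

```javascript
// Hypothetical stand-in for models.Message; it mimics destroy() resolving
// to the number of deleted rows.
const rows = new Map([['1', { id: '1', text: 'Hello' }]]);

const fakeModels = {
  Message: {
    destroy: async ({ where: { id } }) => (rows.delete(id) ? 1 : 0),
  },
};

const deleteMessage = async (parent, { id }, { models }) => {
  const deletedCount = await models.Message.destroy({ where: { id } });

  // Turn the row count into the Boolean that the schema promises.
  return deletedCount > 0;
};

// Deleting the same message twice: only the first attempt removes a row.
const results = Promise.all([
  deleteMessage(null, { id: '1' }, { models: fakeModels }),
  deleteMessage(null, { id: '1' }, { models: fakeModels }),
]);

results.then(([first, second]) => console.log(first, second));
```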

There was one more crucial change in the two files: async/await. Sequelize is a JavaScript promise-based ORM, so it always returns a JavaScript promise when operating on a database. That's where async/await can be used as a more readable version for asynchronous requests in JavaScript. You learned about the returned results of GraphQL resolvers in Apollo Server in a previous section. A result can be a JavaScript promise as well, because the resolvers are waiting for its actual result. In this case, you can also get rid of the async/await statements and your resolvers would still work. Sometimes it is better to be more explicit, however, especially when we add more business logic within the resolver's function body later, so we will keep the statements for now.
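To see that both styles lead to the same result, compare a resolver with async/await to one that returns the promise directly. This is a minimal sketch; fakeModels is a hypothetical stand-in whose findByPk() returns a promise, just as a Sequelize model would.

```javascript
// Hypothetical stand-in for a Sequelize model: findByPk() returns a promise.
const fakeModels = {
  User: {
    findByPk: id => Promise.resolve({ id, username: 'rwieruch' }),
  },
};

// Explicit version with async/await.
const userWithAwait = async (parent, { id }, { models }) => {
  return await models.User.findByPk(id);
};

// Implicit version: the promise is returned as it is.
const userWithoutAwait = (parent, { id }, { models }) =>
  models.User.findByPk(id);

const context = { models: fakeModels };

const comparison = Promise.all([
  userWithAwait(null, { id: 1 }, context),
  userWithoutAwait(null, { id: 1 }, context),
]);

comparison.then(([first, second]) => {
  console.log(first.username === second.username);
});
```

Whoever calls the resolver receives a promise in both cases, which is why the statements are optional as long as the function body does nothing else with the result.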

Now we'll shift to seeding the database with sample data when your application starts with npm start. Once your database synchronizes before your server listens, you can create two user records manually with messages in your database. The following code for the src/index.js file shows how to perform these operations with async/await. Users will have a username with associated messages.

...

const eraseDatabaseOnSync = true;

sequelize.sync({ force: eraseDatabaseOnSync }).then(async () => {
  if (eraseDatabaseOnSync) {
    // wait for the seed data before the server accepts requests
    await createUsersWithMessages();
  }

  app.listen({ port: 8000 }, () => {
    console.log('Apollo Server on http://localhost:8000/graphql');
  });
});

const createUsersWithMessages = async () => {
  await models.User.create(
    {
      username: 'rwieruch',
      messages: [
        {
          text: 'Published the Road to learn React',
        },
      ],
    },
    {
      include: [models.Message],
    },
  );

  await models.User.create(
    {
      username: 'ddavids',
      messages: [
        {
          text: 'Happy to release ...',
        },
        {
          text: 'Published a complete ...',
        },
      ],
    },
    {
      include: [models.Message],
    },
  );
};

The force flag in your Sequelize sync() method drops and recreates the tables on every application startup, which is why the database can be seeded from scratch each time. You can either remove the flag or set it to false if you want to keep accumulated database changes over time. The flag should be removed for your production database at some point.

Next, we have to handle the me user. Before, you used one of the users from the sample data; now, the user will come from a database. It's a good opportunity to write a custom method for your user model in the src/models/user.js file:

const user = (sequelize, DataTypes) => {
  const User = sequelize.define('user', {
    username: {
      type: DataTypes.STRING,
    },
  });

  User.associate = models => {
    User.hasMany(models.Message, { onDelete: 'CASCADE' });
  };

  User.findByLogin = async login => {
    let user = await User.findOne({
      where: { username: login },
    });

    if (!user) {
      user = await User.findOne({
        where: { email: login },
      });
    }

    return user;
  };

  return User;
};

export default user;

The findByLogin() method on your user model retrieves a user by username or by email entry. You don't have an email entry on the user yet, but it will be added when the application has an authentication mechanism. The login argument is used for both username and email, for retrieving the user from the database, and you can see how it is used to sign in to an application with username or email.

You have introduced your first custom method on a database model. It is always worth considering where to put this business logic. When giving your model these access methods, you may end up with a concept called fat models. An alternative would be writing separate services like functions or classes for these data access layer functionalities.
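For comparison, the service alternative could be sketched as a plain function that receives the models as an argument. Everything here is an assumption for illustration: findUserByLogin is a hypothetical function name, and fakeModels is an in-memory stand-in for the Sequelize user model with made-up test data.

```javascript
// Hypothetical service function: the lookup lives outside of the model.
const findUserByLogin = async (models, login) => {
  const userByUsername = await models.User.findOne({
    where: { username: login },
  });

  if (userByUsername) {
    return userByUsername;
  }

  return models.User.findOne({ where: { email: login } });
};

// Hypothetical in-memory stand-in for the Sequelize user model.
const users = [{ id: 1, username: 'rwieruch', email: 'hello@robin.com' }];

const fakeModels = {
  User: {
    findOne: async ({ where }) => {
      const [[key, value]] = Object.entries(where);

      return users.find(user => user[key] === value) || null;
    },
  },
};

const lookups = Promise.all([
  findUserByLogin(fakeModels, 'rwieruch'),
  findUserByLogin(fakeModels, 'hello@robin.com'),
  findUserByLogin(fakeModels, 'unknown'),
]);

lookups.then(results => console.log(results));
```

The trade-off is mostly about organization: the model stays slim, but every caller has to know about the service and pass the models along.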

The new model method can be used to retrieve the me user from the database. Then you can put it into the context object when the Apollo Server is instantiated in the src/index.js file:

const server = new ApolloServer({
  typeDefs: schema,
  resolvers,
  context: {
    models,
    me: models.User.findByLogin('rwieruch'),
  },
});

However, this cannot work yet, because the user is read asynchronously from the database, so me would be a JavaScript promise rather than the actual user; and because you may want to retrieve the me user on a per-request basis from the database. Otherwise, the me user has to stay the same after the Apollo Server is created. Instead, use a function that returns the context object rather than an object for the context in Apollo Server. This function uses the async/await statements. The function is invoked every time a request hits your GraphQL API, so the me user is retrieved from the database with every request.

const server = new ApolloServer({
  typeDefs: schema,
  resolvers,
  context: async () => ({
    models,
    me: await models.User.findByLogin('rwieruch'),
  }),
});
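The per-request behavior can be illustrated without a server. In this sketch, createContext has the same shape as the context function above, fakeModels is a hypothetical stand-in that counts its database lookups, and the two calls only simulate what a server would do for two incoming requests.

```javascript
// Hypothetical stand-in for the user model; it counts how often it is queried.
let lookups = 0;

const fakeModels = {
  User: {
    findByLogin: async login => {
      lookups += 1;

      return { id: 1, username: login };
    },
  },
};

// Same shape as the context function passed to Apollo Server.
const createContext = async () => ({
  models: fakeModels,
  me: await fakeModels.User.findByLogin('rwieruch'),
});

// Simulate two incoming requests: each one gets its own context object.
const simulation = (async () => {
  const first = await createContext();
  const second = await createContext();

  return { first, second, lookupCount: lookups };
})();

simulation.then(({ lookupCount }) => console.log(lookupCount));
```

Each simulated request triggers its own lookup and receives a fresh context object with a resolved me user rather than a pending promise.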

You should be able to start your application again. Try out different GraphQL queries and mutations in GraphQL Playground, and verify that everything is working for you. If there are any errors regarding the database, make sure that it is properly connected to your application and that the database is running on the command line too.

Since you have introduced a database now, GraphQL Playground is not the only manual testing tool anymore. Whereas GraphQL Playground can be used to test your GraphQL API, you may want to use the psql command line interface to query your database manually. For instance, you may want to check user message records in the database or whether a message exists there after it has been created with a GraphQL mutation. First, connect to your database on the command line:

psql mydatabase

And second, try the following SQL statements. It's the perfect opportunity to learn more about SQL itself:

SELECT * from users;
SELECT text from messages;

Which leads to:

mydatabase=# SELECT * from users;
 id | username |         createdAt          |         updatedAt
----+----------+----------------------------+----------------------------
  1 | rwieruch | 2018-08-21 21:15:38.758+08 | 2018-08-21 21:15:38.758+08
  2 | ddavids  | 2018-08-21 21:15:38.786+08 | 2018-08-21 21:15:38.786+08
(2 rows)

mydatabase=# SELECT text from messages;
               text
-----------------------------------
 Published the Road to learn React
 Happy to release ...
 Published a complete ...
(3 rows)

Every time you perform GraphQL mutations, it is wise to check your database records with the psql command-line interface. It is a great way to learn about SQL, which is normally abstracted away by using an ORM such as Sequelize.

In this section, you have used a PostgreSQL database as data source for your GraphQL server, using Sequelize as the glue between your database and your GraphQL resolvers. However, this was only one possible solution. Since GraphQL is data source agnostic, you can plug any data source into your resolvers. It could be another database (e.g. MongoDB, Neo4j, Redis), multiple databases, or a (third-party) REST/GraphQL API endpoint. GraphQL only ensures all fields are validated, executed, and resolved when there is an incoming query or mutation, regardless of the data source.

Exercises:

- Confirm your source code for the last section

- Confirm the changes from the last section

- Experiment with psql and the seeding of your database

- Experiment with GraphQL playground and query data which comes from a database now

- Remove and add the async/await statements in your resolvers and see how they still work

- Read more about GraphQL execution

Apollo Server: Validation and Errors

Validation, error, and edge case handling are not often discussed in programming tutorials. This section should give you some insights into these topics for Apollo Server and GraphQL. With GraphQL, you are in charge of what returns from GraphQL resolvers. It isn't too difficult to insert business logic into your resolvers, for instance, before they read from your database.

export default {
  Query: {
    users: async (parent, args, { models }) => {
      return await models.User.findAll();
    },
    user: async (parent, { id }, { models }) => {
      return await models.User.findByPk(id);
    },
    me: async (parent, args, { models, me }) => {
      if (!me) {
        return null;
      }

      return await models.User.findByPk(me.id);
    },
  },

  ...
};

It may be a good idea to keep the resolver surface slim and add business logic services on the side. Then it is always simple to reason about the resolvers. In this application, we keep the business logic in the resolvers to keep everything in one place and avoid scattering logic across the entire application.

Let's start with the validation, which will lead to error handling. GraphQL isn't directly concerned with validation, but it sits between two parts of the tech stack that are: the client application (e.g. showing validation messages) and the database (e.g. validation of entities before writing to the database).

Let's add some basic validation rules to your database models. This section gives an introduction to the topic, as it would become too verbose to cover all use cases in this application. First, add validation to your user model in the src/models/user.js file:

const user = (sequelize, DataTypes) => {
  const User = sequelize.define('user', {
    username: {
      type: DataTypes.STRING,
      unique: true,
      allowNull: false,
      validate: {
        notEmpty: true,
      },
    },
  });

  ...

  return User;
};

export default user;

Next, add validation rules to your message model in the src/models/message.js file:

const message = (sequelize, DataTypes) => {
  const Message = sequelize.define('message', {
    text: {
      type: DataTypes.STRING,
      validate: { notEmpty: true },
    },
  });

  Message.associate = models => {
    Message.belongsTo(models.User);
  };

  return Message;
};

export default message;

Now, try to create a message with an empty text in GraphQL Playground. The GraphQL schema accepts the empty string, but the database now requires a non-empty text for your message. The same applies to your user entities, which now require a unique username. GraphQL and Apollo Server can handle these cases. You should see a similar input and output:

// mutation
mutation {
  createMessage(text: "") {
    id
  }
}

// mutation error result
{
  "data": null,
  "errors": [
    {
      "message": "Validation error: Validation notEmpty on text failed",
      "locations": [],
      "path": [
        "createMessage"
      ],
      "extensions": { ... }
    }
  ]
}

It seems like Apollo Server's resolvers make sure to transform JavaScript errors into valid GraphQL output. It is already possible to use this common error format in your client application without any additional error handling.

If you want to add custom error handling to your resolver, you can always add the common try/catch block for async/await:

export default {
  Query: {
    ...
  },

  Mutation: {
    createMessage: async (parent, { text }, { me, models }) => {
      try {
        return await models.Message.create({
          text,
          userId: me.id,
        });
      } catch (error) {
        throw new Error(error);
      }
    },

    ...
  },

  ...
};

The error output for GraphQL should stay the same in GraphQL Playground, because you used the same error object to generate the Error instance. However, you could also use your custom message here with throw new Error('My error message.');.
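A custom message in the catch block can be tried out in isolation. The sketch below uses a hypothetical fakeModels stand-in that rejects empty texts in a similar way to the notEmpty rule, so it doesn't need a database; the wording of the custom message is just an example.

```javascript
// Hypothetical stand-in for models.Message that rejects empty texts,
// similar to what the notEmpty validation rule does.
const fakeModels = {
  Message: {
    create: async ({ text, userId }) => {
      if (!text) {
        throw new Error('Validation error: Validation notEmpty on text failed');
      }

      return { id: 1, text, userId };
    },
  },
};

const createMessage = async (parent, { text }, { me, models }) => {
  try {
    return await models.Message.create({ text, userId: me.id });
  } catch (error) {
    // Replace the technical message with one that is meant for end users.
    throw new Error('A message has to have a text.');
  }
};

const attempt = createMessage(
  null,
  { text: '' },
  { me: { id: 1 }, models: fakeModels },
)
  .then(() => 'created')
  .catch(error => error.message);

attempt.then(result => console.log(result));
```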

Another way of adjusting your error message is in the database model definition. Each validation rule can have a custom validation message, which can be defined in the Sequelize model:

const message = (sequelize, DataTypes) => {
  const Message = sequelize.define('message', {
    text: {
      type: DataTypes.STRING,
      validate: {
        notEmpty: {
          args: true,
          msg: 'A message has to have a text.',
        },
      },
    },
  });

  Message.associate = models => {
    Message.belongsTo(models.User);
  };

  return Message;
};

export default message;

This would lead to the following error(s) when attempting to create a message with an empty text. Again, it is straightforward in your client application, because the error format stays the same:

{
  "data": null,
  "errors": [
    {
      "message": "SequelizeValidationError: Validation error: A message has to have a text.",
      "locations": [],
      "path": [
        "createMessage"
      ],
      "extensions": { ... }
    }
  ]
}

That's one of the main benefits of using Apollo Server for GraphQL. Error handling is often free, because an error--be it from the database, a custom JavaScript error or another third party--gets transformed into a valid GraphQL error result. On the client side, you don't need to worry about the error result's shape, because it comes in a common GraphQL error format where the data object is null but the errors are captured in an array. If you want to change your custom error, you can do it on a per-resolver basis. Apollo Server also comes with a solution for global error handling:

const server = new ApolloServer({
  typeDefs: schema,
  resolvers,
  formatError: error => {
    // remove the internal sequelize error message
    // leave only the important validation error
    const message = error.message
      .replace('SequelizeValidationError: ', '')
      .replace('Validation error: ', '');

    return {
      ...error,
      message,
    };
  },
  context: async () => ({
    models,
    me: await models.User.findByLogin('rwieruch'),
  }),
});

These are the essentials for validation and error handling with GraphQL in Apollo Server. Validation can happen on a database (model) level or on a business logic level (resolvers). It can happen on a directive level too (see exercises). If there is an error, GraphQL and Apollo Server will format it to work with GraphQL clients. You can also format errors globally in Apollo Server.
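Since the formatError function only transforms its input, you can try it outside of Apollo Server. The sketch below copies the transformation into a standalone function and feeds it a plain object that only imitates the shape of a GraphQL error; the sample error is an assumption for illustration.

```javascript
// The same transformation as in the formatError option, as a standalone function.
const formatError = error => {
  const message = error.message
    .replace('SequelizeValidationError: ', '')
    .replace('Validation error: ', '');

  return { ...error, message };
};

// A plain object that imitates the shape of a GraphQL error.
const formatted = formatError({
  message:
    'SequelizeValidationError: Validation error: A message has to have a text.',
  path: ['createMessage'],
});

console.log(formatted.message);
```

Only the two internal prefixes are removed, while the other properties of the error, such as the path, are passed through unchanged.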

Exercises:

- Confirm your source code for the last section

- Confirm the changes from the last section

- Add more validation rules to your database models

- Read more about validation in the Sequelize documentation

- Read more about Error Handling with Apollo Server

- Get to know the different custom errors in Apollo Server

- Read more about GraphQL field level validation with custom directives

- Read more about custom schema directives

Apollo Server: Authentication

Authentication in GraphQL is a popular topic. There is no opinionated way of doing it, but many people need it for their applications. GraphQL itself isn't opinionated about authentication since it is only a query language. If you want authentication in GraphQL, consider using GraphQL mutations. In this section, we use a minimalistic approach to add authentication to your GraphQL server. Afterward, it should be possible to register (sign up) and log in (sign in) a user to your application. The previously used me user will be the authenticated user.

In preparation for the authentication mechanism with GraphQL, extend the user model in the src/models/user.js file. The user needs an email address (as unique identifier) and a password. Both email address and username (another unique identifier) can be used to sign in to the application, which is why both properties were used for the user's findByLogin() method.

...

const user = (sequelize, DataTypes) => {
  const User = sequelize.define('user', {
    username: {
      type: DataTypes.STRING,
      unique: true,
      allowNull: false,
      validate: {
        notEmpty: true,
      },
    },
    email: {
      type: DataTypes.STRING,
      unique: true,
      allowNull: false,
      validate: {
        notEmpty: true,
        isEmail: true,
      },
    },
    password: {
      type: DataTypes.STRING,
      allowNull: false,
      validate: {
        notEmpty: true,
        len: [7, 42],
      },
    },
  });

  ...

  return User;
};

export default user;

The two new entries for the user model have their own validation rules, same as before. The password of a user should be between 7 and 42 characters, and the email should have a valid email format. If any of these validations fails during user creation, Sequelize throws a JavaScript error, which is transformed into a GraphQL error and transferred to the client. The registration form in the client application could then display the validation error.
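To get a feeling for what these rules accept and reject, here is a rough plain-JavaScript approximation. It is only a sketch: validateUser is a hypothetical function, the regular expression is a simplification, and Sequelize's own validators are more thorough, especially for the email format.

```javascript
// Rough approximation of the model's validation rules; Sequelize's own
// validators are stricter, especially for the email format.
const validateUser = ({ username, email, password }) => {
  const errors = [];

  if (!username) {
    errors.push('username must not be empty');
  }

  if (!/^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(email || '')) {
    errors.push('email must be a valid email address');
  }

  if (!password || password.length < 7 || password.length > 42) {
    errors.push('password must be between 7 and 42 characters');
  }

  return errors;
};

const validResult = validateUser({
  username: 'rwieruch',
  email: 'hello@robin.com',
  password: 'rwieruch',
});

const invalidResult = validateUser({
  username: 'ddavids',
  email: 'not-an-email',
  password: 'short',
});

console.log(validResult, invalidResult);
```

The first user passes all checks, while the second one fails both the email and the password rule.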

You may want to add the email, but not the password, to your GraphQL user schema in the src/schema/user.js file too:

import { gql } from 'apollo-server-express';

export default gql`
  ...

  type User {
    id: ID!
    username: String!
    email: String!
    messages: [Message!]
  }
`;

Next, add the new properties to your seed data in the src/index.js file:

const createUsersWithMessages = async () => {
  await models.User.create(
    {
      username: 'rwieruch',
      email: 'hello@robin.com',
      password: 'rwieruch',
      messages: [ ... ],
    },
    {
      include: [models.Message],
    },
  );
  await models.User.create(
    {
      username: 'ddavids',
      email: 'hello@david.com',
      password: 'ddavids',
      messages: [ ... ],
    },
    {
      include: [models.Message],
    },
  );
};

That's the data migration of your database to get started with GraphQL authentication.

Registration (Sign Up) with GraphQL

Now, let's examine the details for GraphQL authentication. You will implement two GraphQL mutations: one to register a user, and one to log in to the application. Let's start with the sign up mutation in the src/schema/user.js file:

import { gql } from 'apollo-server-express';
export default gql`
  extend type Query {
    users: [User!]
    user(id: ID!): User
    me: User
  }
  extend type Mutation {
    signUp(
      username: String!
      email: String!
      password: String!
    ): Token!
  }
  type Token {
    token: String!
  }
  type User {
    id: ID!
    username: String!
    messages: [Message!]
  }
`;

The signUp mutation takes three non-nullable arguments: username, email, and password. These are used to create a user in the database. The user should be able to take the username or email address combined with the password to enable a successful login.

Now we'll consider the return type of the signUp mutation. Since we are going to use token-based authentication with GraphQL, it is sufficient to return a token that is nothing more than a string. However, to distinguish the token in the GraphQL schema, it has its own GraphQL type. You will learn more about tokens in the following sections, because the token is at the core of the authentication mechanism for this application.

First, add the counterpart for your new mutation in the GraphQL schema as a resolver function. In your src/resolvers/user.js file, add the following resolver function that creates a user in the database and returns an object with the token value as string.

const createToken = async (user) => {
  ...
};
export default {
  Query: {
    ...
  },
  Mutation: {
    signUp: async (
      parent,
      { username, email, password },
      { models },
    ) => {
      const user = await models.User.create({
        username,
        email,
        password,
      });
      return { token: createToken(user) };
    },
  },
  ...
};

That's the GraphQL framework around a token-based registration. You created a GraphQL mutation and a resolver for it, which creates a user in the database based on certain validations and its incoming resolver arguments. It creates a token for the registered user. For now, the setup is sufficient to create a new user with a GraphQL mutation.

Securing Passwords with Bcrypt

There is one major security flaw in this code: the user password is stored in plain text in the database, which makes it much easier for third parties to access it. To remedy this, we use add-ons like bcrypt to hash passwords. First, install it on the command line:

npm install bcrypt --save

Note: If you run into any problems with bcrypt on Windows while installing it, you can try out a substitute called bcrypt.js (published on npm as bcryptjs). It is slower, but people reported that it works on their machine.

Now it is possible to hash the password with bcrypt in the user's resolver function when it gets created on a signUp mutation. There is also an alternative way with Sequelize. In your user model, define a hook function that is executed every time a user entity is created:

const user = (sequelize, DataTypes) => {
  const User = sequelize.define('user', {
    ...
  });
  ...
  User.beforeCreate(user => {
    ...
  });
  return User;
};
export default user;

In this hook function, add the functionalities to alter your user entity's properties before they reach the database. Let's do it for the hashed password by using bcrypt.

import bcrypt from 'bcrypt';
const user = (sequelize, DataTypes) => {
  const User = sequelize.define('user', {
    ...
  });
  ...
  User.beforeCreate(async user => {
    user.password = await user.generatePasswordHash();
  });
  User.prototype.generatePasswordHash = async function() {
    const saltRounds = 10;
    return await bcrypt.hash(this.password, saltRounds);
  };
  return User;
};
export default user;

The bcrypt hash() method takes a string, the user's password, and an integer called salt rounds. Each additional salt round doubles the cost of hashing the password, which makes it more costly for attackers to brute-force the hash value. A common value for salt rounds nowadays ranges from 10 to 12, because a higher number also slows down every legitimate sign up and sign in on your server.
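The effect of the salt rounds is easiest to see as arithmetic. bcrypt treats the value as an exponent, so the work grows with 2 to the power of the salt rounds. The following sketch only computes that relative cost. The relativeCost function is made up for illustration, and nothing here calls bcrypt itself.

```javascript
// Hypothetical helper: relative bcrypt work for a number of salt rounds,
// compared to a baseline of 10 rounds. The cost factor is logarithmic,
// so the work grows as 2^saltRounds.
const relativeCost = (saltRounds, baseline = 10) =>
  2 ** saltRounds / 2 ** baseline;

console.log(relativeCost(10)); // 1
console.log(relativeCost(11)); // 2
console.log(relativeCost(12)); // 4
```

Going from 10 to 12 salt rounds therefore makes every hash, for you and for an attacker, roughly four times as expensive.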

In this implementation, the generatePasswordHash() function is added to the user's prototype chain. That's why it is possible to execute the function as a method on each user instance, so you have the user itself available within the method as this. You can also take the user instance with its password as an argument, which I prefer, though using JavaScript's prototypal inheritance is a good tool for any web developer. For now, the password is hashed with bcrypt every time a user is created, before it gets stored in the database.

Token based Authentication in GraphQL

We still need to implement the token based authentication. So far, there is only a placeholder in your application for creating the token that is returned on a sign up and sign in mutation. A signed in user can be identified with this token, and is allowed to read and write data from the database. Since a registration will automatically lead to a login, the token is generated in both phases.

Next are the implementation details for the token-based authentication in GraphQL. Regardless of GraphQL, you are going to use a JSON web token (JWT) to identify your user. The definition for a JWT from the official website says: JSON Web Tokens are an open, industry standard RFC 7519 method for representing claims securely between two parties. In other words, a JWT is a secure way to handle the communication between two parties (e.g. a client and a server application). If you haven't worked on security related applications before, the following section will guide you through the process, and you'll see the token is just a secured JavaScript object with user information.

To create a JWT in this application, we'll use the popular jsonwebtoken node package. Install it on the command line:

npm install jsonwebtoken --save

Now, import it in your src/resolvers/user.js file and use it to create the token:

import jwt from 'jsonwebtoken';
const createToken = async user => {
  const { id, email, username } = user;
  return await jwt.sign({ id, email, username });
};
...

The first argument to "sign" a token can be any user information except sensitive data like passwords, because the token will land on the client side of your application stack. Signing a token means putting data into it, which you've done, and securing it, which you haven't done yet. To secure your token, pass in a secret (any long string) that is only available to you and your server. No third-party entities should have access, because it is used to sign and later verify your token.

Add the secret to your environment variables in the .env file:

DATABASE=postgres
DATABASE_USER=postgres
DATABASE_PASSWORD=postgres
SECRET=wr3r23fwfwefwekwself.2456342.dawqdq

Then, in the src/index.js file, pass the secret via Apollo Server's context to all resolver functions:

const server = new ApolloServer({
  typeDefs: schema,
  resolvers,
  ...
  context: async () => ({
    models,
    me: await models.User.findByLogin('rwieruch'),
    secret: process.env.SECRET,
  }),
});

Next, use it in your signUp resolver function by passing it to the token creation. The sign method of JWT handles the rest. You can also pass in a third argument for setting an expiration time or date for a token. In this case, the token is only valid for 30 minutes, after which a user has to sign in again.

import jwt from 'jsonwebtoken';
const createToken = async (user, secret, expiresIn) => {
  const { id, email, username } = user;
  return await jwt.sign({ id, email, username }, secret, {
    expiresIn,
  });
};
export default {
  Query: {
    ...
  },
  Mutation: {
    signUp: async (
      parent,
      { username, email, password },
      { models, secret },
    ) => {
      const user = await models.User.create({
        username,
        email,
        password,
      });
      return { token: createToken(user, secret, '30m') };
    },
  },
  ...
};

Now you have secured your information in the token as well. If you want to verify it later, in order to trust the data in it (the first argument of the sign method), you need the secret again. Keep in mind that the data is only signed, not encrypted: anyone who holds the token can read it. Furthermore, the token is only valid for 30 minutes.
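If you are curious what signing does under the hood, the following sketch rebuilds a token by hand with Node's built-in crypto module. It assumes the HS256 algorithm (HMAC with SHA-256) and a recent Node.js version that supports the base64url encoding. The functions signToken, decodeToken and verifyToken are hypothetical stand-ins for illustration, not the jsonwebtoken API, and they skip most of the checks a real library performs.

```javascript
const crypto = require('crypto');

// base64url-encode a JSON-serializable value
const encode = value =>
  Buffer.from(JSON.stringify(value)).toString('base64url');

// HMAC-SHA256 signature over the "header.payload" string
const signature = (data, secret) =>
  crypto.createHmac('sha256', secret).update(data).digest('base64url');

// Hypothetical stand-in for signing a token with HS256
const signToken = (payload, secret, expiresInSeconds) => {
  const now = Math.floor(Date.now() / 1000);
  const header = encode({ alg: 'HS256', typ: 'JWT' });
  const body = encode({ ...payload, iat: now, exp: now + expiresInSeconds });
  return `${header}.${body}.${signature(`${header}.${body}`, secret)}`;
};

// Anyone can read the payload: no secret is involved here
const decodeToken = token =>
  JSON.parse(Buffer.from(token.split('.')[1], 'base64url').toString());

// Only the holder of the secret can check that the token is authentic
const verifyToken = (token, secret) => {
  const [header, body, sig] = token.split('.');
  const expected = signature(`${header}.${body}`, secret);
  const authentic =
    sig.length === expected.length &&
    crypto.timingSafeEqual(Buffer.from(sig), Buffer.from(expected));
  // The token must also not be expired
  return authentic && decodeToken(token).exp > Math.floor(Date.now() / 1000);
};

// 30 minutes, expressed in seconds
const token = signToken({ id: 1, username: 'rwieruch' }, 'my-secret', 30 * 60);

console.log(decodeToken(token).username); // rwieruch
console.log(verifyToken(token, 'my-secret')); // true
console.log(verifyToken(token, 'wrong-secret')); // false
```

The sketch shows why a password never belongs in the token: the payload is only encoded, while the secret protects the signature that proves the data was not changed.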

That's it for the registration: you are creating a user and returning a valid token that can be used from the client application to authenticate the user. The server can verify the token that comes with every request and allow the user to access sensitive data. You can try out the registration with GraphQL Playground, which should create a user in the database and return a token for it. Also, you can check your database with psql to test if the user was created with a hashed password.

Login (Sign In) with GraphQL

Before you dive into the authorization with the token on a per-request basis, let's implement the second mutation for the authentication mechanism: the signIn mutation (or login mutation). Again, first we add the GraphQL mutation to your user's schema in the src/schema/user.js file:

import { gql } from 'apollo-server-express';
export default gql`
  ...
  extend type Mutation {
    signUp(
      username: String!
      email: String!
      password: String!
    ): Token!
    signIn(login: String!, password: String!): Token!
  }
  type Token {
    token: String!
  }
  ...
`;

Second, add the resolver counterpart to your src/resolvers/user.js file:

import jwt from 'jsonwebtoken';
import { AuthenticationError, UserInputError } from 'apollo-server';
...
export default {
  Query: {
    ...
  },
  Mutation: {
    signUp: async (...) => {
      ...
    },
    signIn: async (
      parent,
      { login, password },
      { models, secret },
    ) => {
      const user = await models.User.findByLogin(login);
      if (!user) {
        throw new UserInputError(
          'No user found with this login credentials.',
        );
      }
      const isValid = await user.validatePassword(password);
      if (!isValid) {
        throw new AuthenticationError('Invalid password.');
      }
      return { token: createToken(user, secret, '30m') };
    },
  },
  ...
};

Let's go through the new resolver function for the login step by step. As arguments, the resolver has access to the input arguments from the GraphQL mutation (login, password) and the context (models, secret). When a user tries to sign in to your application, the login, which can be either the unique username or unique email, is taken to retrieve a user from the database. If there is no user, the application throws an error that can be used in the client application to notify the user. If there is a user, the user's password is validated with the validatePassword() method, which you will see on the user model in the next example. If the password is not valid, the application throws an error to the client application. If the password is valid, the signIn mutation returns a token in the same way as the signUp mutation. The client application either performs a successful login or shows an error message for invalid credentials. You can also see that specific Apollo Server errors are used instead of the generic JavaScript Error class.

Next, we want to implement the validatePassword() method on the user instance. Place it in the src/models/user.js file, because that's where all the model methods for the user are stored, same as the findByLogin() method.

import bcrypt from 'bcrypt';
const user = (sequelize, DataTypes) => {
  ...
  User.findByLogin = async login => {
    let user = await User.findOne({
      where: { username: login },
    });
    if (!user) {
      user = await User.findOne({
        where: { email: login },
      });
    }
    return user;
  };
  User.beforeCreate(async user => {
    user.password = await user.generatePasswordHash();
  });
  User.prototype.generatePasswordHash = async function() {
    const saltRounds = 10;
    return await bcrypt.hash(this.password, saltRounds);
  };
  User.prototype.validatePassword = async function(password) {
    return await bcrypt.compare(password, this.password);
  };
  return User;
};
export default user;

Again, it's JavaScript's prototypal inheritance that makes a method available on the user instance. In this method, the user (this) and its password can be compared with the incoming password from the GraphQL mutation using bcrypt, because the password on the user is hashed, and the incoming password is plain text. Fortunately, bcrypt will tell you whether the password is correct or not when a user signs in.
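How can a plain text password be compared with a hash at all? The idea is to hash the incoming password with the same salt again and to compare the results. The following sketch shows this with scrypt from Node's built-in crypto module, which is swapped in for bcrypt here only so that the example runs without installing anything. The functions hashPassword and comparePassword are hypothetical and not part of the application.

```javascript
const crypto = require('crypto');

// Stand-in for bcrypt.hash(): uses scrypt instead of bcrypt (assumption,
// chosen so the sketch has no dependencies).
const hashPassword = password => {
  const salt = crypto.randomBytes(16).toString('hex');
  const hash = crypto.scryptSync(password, salt, 32).toString('hex');
  // Store the salt next to the hash; bcrypt also embeds the salt in its output
  return `${salt}:${hash}`;
};

// Stand-in for bcrypt.compare(): re-hash the candidate with the stored salt
const comparePassword = (candidate, stored) => {
  const [salt, hash] = stored.split(':');
  const candidateHash = crypto.scryptSync(candidate, salt, 32);
  // Constant-time comparison of two buffers with the same length
  return crypto.timingSafeEqual(candidateHash, Buffer.from(hash, 'hex'));
};

const stored = hashPassword('rwieruch');

console.log(stored !== 'rwieruch'); // true
console.log(comparePassword('rwieruch', stored)); // true
console.log(comparePassword('wrong-password', stored)); // false
```

Because a new random salt is generated for every user, two users with the same password end up with different hashes in the database.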

Now you have set up registration (sign up) and login (sign in) for your GraphQL server application. You used bcrypt to hash a plain text password in a Sequelize hook function before it reaches the database, and to compare an incoming password with the stored hash on sign in. You also used JWT to sign user data with a secret into a token. The token is returned on every sign up and sign in. The client application can then save the token (e.g. in the local storage of the browser) and send it along with every GraphQL query and mutation as authorization.

The next section will teach you about authorization in GraphQL on the server-side, and what you should do with the token once a user is authenticated with your application after a successful registration or login.

Exercises:

- Confirm your source code for the last section

- Confirm the changes from the last section

- Register (sign up) a new user with GraphQL Playground

- Check your users and their hashed passwords in the database with psql

- Read more about JSON web tokens (JWT)

- Login (sign in) a user with GraphQL Playground

- Copy and paste the token into the interactive token decoder on the JWT website (conclusion: the information itself isn't secure, that's why you shouldn't put a password in the token)

Authorization with GraphQL and Apollo Server